An Artificial Intelligence Just Finished The Math Section Of The SAT, And Only Scored Below Average

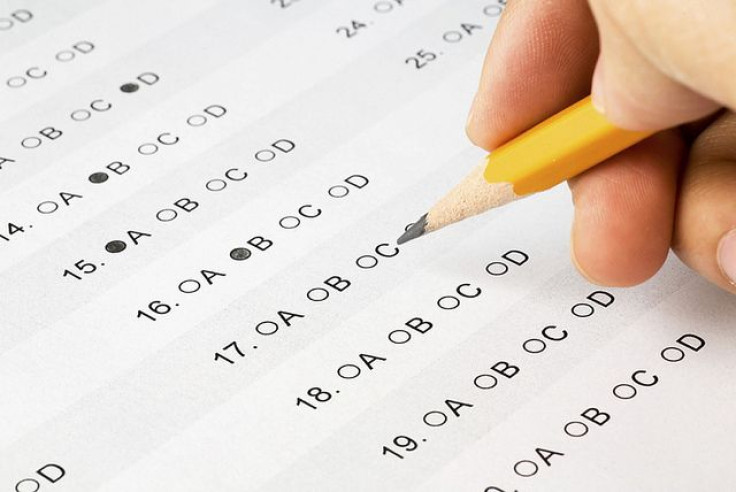

Think back to high school, during what might’ve been the most stressful time in your life. You’ve just sat down for the SAT, you have your #2 pencils sharpened, your erasers handy, and a fine sheen of sweat upon your brow. Three hours later, you’ve either aced or failed the test, and possibly sunk your college aspirations along with it. Now, imagine a robot taking the test and getting a roughly average score, and you’ll find yourself exactly where we are today in terms of the strength of our artificial intelligence (AI). Whether that makes you happy or terrified is up to you.

The latest step toward the eventual world dominance of the machines comes from the Allen Institute for Artificial Intelligence (AI2), which, in collaboration with the University of Washington, has created an AI called GeoS that can earn a roughly average score on the math section of the SAT. GeoS answered about 49 percent of the questions correctly, which works out to a score of about 500 out of 800 possible points, relatively close to the average high schooler’s score of 513.

Now, you may be thinking, “Computers are really good at math, so I am not surprised.” Good, you shouldn’t be. What should surprise you, however, is the way that GeoS took the test. Instead of being fed all the information on the SAT in a language that the program could easily understand, GeoS “looked” at the test the same way you did all those years ago. It saw the same diagrams, charts, and graphs that you did. It interpreted them much as you would have, and came up with the set of formulas it judged most likely to match the problem. Since those formulas are in a language GeoS can understand, it can then solve the question pretty quickly. Finally, it goes through the multiple-choice answers and finds the one that matches the answer it came up with.
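To make those last two steps concrete, here is a minimal sketch in Python of solving a formula and then matching the result against the multiple-choice options. It is an illustration only, not the researchers' code: the question, the answer choices, and the names `solve_hypotenuse` and `pick_choice` are all hypothetical, and the hard part GeoS tackles (turning text and diagrams into formulas) is skipped entirely.

```python
import math


def solve_hypotenuse(leg_a, leg_b):
    """Solve the formula for a hypothetical question: a right triangle's hypotenuse."""
    return math.hypot(leg_a, leg_b)


def pick_choice(computed, choices, tol=1e-6):
    """Return the label of the choice matching the computed value, or None to abstain."""
    # Find the option numerically closest to the value we computed.
    label, value = min(choices.items(), key=lambda kv: abs(kv[1] - computed))
    # Only answer when the closest option really matches; otherwise skip the question.
    return label if abs(value - computed) <= tol else None


# Hypothetical question: a right triangle has legs 3 and 4. How long is the hypotenuse?
choices = {"A": 4.0, "B": 5.0, "C": 6.0, "D": 7.0, "E": 12.0}
answer = pick_choice(solve_hypotenuse(3, 4), choices)
print(answer)  # prints "B"
```

Returning `None` when nothing matches is a design choice made for this sketch: it lets the program leave a question blank instead of guessing.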

"Our biggest challenge was converting the question to a computer-understandable language," Ali Farhadi, an assistant professor of computer science and engineering at the University of Washington and research manager at AI2, said in a statement. "One needs to go beyond standard pattern-matching approaches for problems like solving geometry questions that require in-depth understanding of text, diagram, and reasoning."

Before you start stocking your fallout shelter, know that GeoS wasn’t able to answer every single question. In fact, it only answered about half of the questions on the test. But when it did answer, it was scarily accurate, answering correctly 96 percent of the time. Farhadi believes this is the next step in the evolution of a true AI: a computer so advanced it can think like a human.
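Those two figures line up with the roughly 49 percent of questions GeoS got right overall. A quick back-of-the-envelope check in Python, assuming "about half" means a fraction of 0.5 (a rounded figure, so the result is only approximate), with a hypothetical helper name:

```python
def fraction_correct(fraction_attempted, accuracy_when_attempted):
    """Share of all questions answered correctly: attempts times accuracy on attempts."""
    return fraction_attempted * accuracy_when_attempted


# Assumed inputs: "about half" attempted (0.5) and 96 percent accuracy on those.
overall = fraction_correct(0.5, 0.96)
print(f"{overall:.0%}")  # prints "48%"
```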

It remains to be seen what the system is ultimately capable of, but we do know that its score probably wouldn’t get it into the school that helped create it. The University of Washington’s average SAT math score is 580.