Google Glass Vs. Autism: How Face-Tracking And Emotion Response Are Putting 18-Year-Old Catalin Voss On The Map

Imagine, if you will, not being able to read a person’s emotions: seeing upturned lips and not immediately registering a smile. For most people, that possibility seems bleak. But it is one of the principal challenges facing people with autism spectrum disorder (ASD). And it is a challenge that one Stanford University student has set out to tackle with the help of Google’s latest technology, Google Glass.

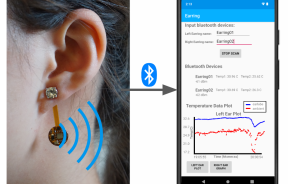

Many people stand to gain from Google Glass, and the possibilities of the retro-futuristic eyewear can seem almost limitless. Catalin Voss, an 18-year-old from Heidelberg, Germany, is testing those limits with a new project called Sension. Co-founded with Jonathan Yan during the pair’s freshman year at Stanford, Sension is designed to let people with ASD lock in on a person’s face and have Google Glass identify that person’s emotion through the device’s built-in camera.

Using eye-tracking technology to help diagnose autism is not new. Doctors often test an infant’s gaze to see how quickly it responds and whether it can comfortably settle on the area around another person’s eyes, something many people with ASD find difficult.

Sension, which is still experimental, takes the technology a step further. People with ASD will no longer have to struggle to read their conversation partner’s face; instead, they can glance at the heads-up display (HUD), trained on that person’s face, and instantly see a word such as “Happy,” “Surprised,” or “Upset.”

“Anything that can be used to facilitate social understanding in people with autism is potentially beneficial,” Derek Ott, a professor at UCLA’s David Geffen School of Medicine, told Wired. Ott, who is not involved with Sension, works in the university’s Psychiatry Division and regularly sees patients with autism.

The Story Behind Sension

Voss and Yan said the inspiration for Sension came after one too many engineering courses failed to hold their interest. They wanted a way to make online learning more effective, and as Google Glass began its meteoric rise, the duo concluded that the webcam was the way to do it.

“We realized there had to be a better way of doing online learning,” Voss told Wired. “We wanted to create a more interactive education experience using the webcam.”

They won an $18,000 grant from a small startup program called Summer@Highland and soon hired coders to help them build their first project. With the help of their mentor Steve Capps, one of the minds behind the original Macintosh and the Newton OS, who has known Voss since Voss was 15, the team set to work developing Sension. Capps said the project “gives a good demo,” but said little more about it.

“I’ve been working for 30 years in this industry and rarely have I seen a college graduate with his combination of creativity and ability and sociability — meaning he can talk,” Capps said of Voss. “Ever heard that old joke? ‘What’s the difference between an introverted nerd and an extroverted nerd? The extroverted nerd looks at your shoes instead of his.’ He’s good at that.”

Where Sension Needs To Go

Voss and Yan may find it easier to work out how Google Glass can help a person with ASD recognize facial expressions than to help that person respond to them. ASD is not limited to difficulty understanding other people’s emotions; many people with autism also struggle to reciprocate them.

How, exactly, is a person with ASD expected to react when Google Glass tells him the person across from him is happy? With a mirroring smile? For how long? Such questions still need to be teased out through Sension’s testing, Ott explained.

ASD encompasses a broad range of social difficulties, from trouble with basic speech at one end of the spectrum to subtler problems with social conventions among high-functioning people at the other. It has no known cause or cure, and it affects about one in 88 children, according to the Centers for Disease Control and Prevention’s Autism and Developmental Disabilities Monitoring Network. Signs typically appear before the age of three, but depending on where people fall on the spectrum, they can gain or lose certain abilities throughout their lives.

If Sension can capture the subtle, fleeting emotions people encounter every day, those one in 88 may see a pair of upturned lips and, for the first time, smile back.

“A lot of today’s social-skills training is done in an artificial setting,” Ott told Wired. “If you can do it in the moment, in the real world, it could be very beneficial.”